Artificial intelligence is bringing sweeping changes to everyday life, yet many people overlook the security risks it carries. Deep learning, its core technology, faces many potential threats, and as deep learning applications become more widespread, more and more security issues are beginning to emerge.

Recently, drawing on a year of detailed research into the security of deep learning systems, the 360 Security Research Institute released the "AI Security Risk White Paper". The white paper points out that software implementation vulnerabilities in deep learning frameworks, maliciously generated samples targeting machine learning models, and polluted training data can all confuse recognition systems driven by artificial intelligence, causing missed detections or misclassifications. They can even cause a system to crash or be hijacked, turning smart devices into zombie nodes used as attack tools.
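To make "malicious sample generation" concrete, here is a minimal sketch of a gradient-sign perturbation against a toy logistic-regression classifier. It is not taken from the white paper: the model, its weights, the input, and the `epsilon` budget are all made-up assumptions chosen only to show how a small, bounded change to an input can flip a prediction.

```python
import math

def predict(weights, bias, x):
    """Probability of class 1 under a toy logistic-regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)

def adversarial_example(weights, bias, x, label, epsilon):
    """Shift each feature by at most epsilon in the direction that raises the loss."""
    p = predict(weights, bias, x)
    # For log loss on this model, d(loss)/d(x_i) = (p - label) * w_i.
    gradient = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]

# Hypothetical model parameters and benign input (true label 1).
weights, bias = [2.0, -1.0, 0.5], 0.0
x = [0.3, 0.1, 0.2]
x_adv = adversarial_example(weights, bias, x, label=1, epsilon=0.25)

print(predict(weights, bias, x) > 0.5)      # is the original assigned to class 1?
print(predict(weights, bias, x_adv) > 0.5)  # is the perturbed copy assigned to class 1?
```

Real attacks target far larger models, but the principle is the same: the perturbation is chosen using the model's own gradient, so it is far more damaging than random noise of the same size.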

Figure 1: Google's AlphaGo amazed the world at Go

In recent years, artificial intelligence technology, represented by Google's AlphaGo, has astounded the world. People are struck by its computing power and rigorous logic. However, AlphaGo's deep learning technology works only in a closed environment and does not interact directly with the outside world, so it faces relatively few security threats. Other widely discussed applications are likewise assumed to run in benign or closed settings. For example, high-accuracy speech recognition assumes naturally recorded input, and image recognition assumes input from ordinary photographs. These discussions do not consider inputs that are maliciously constructed or synthesized.

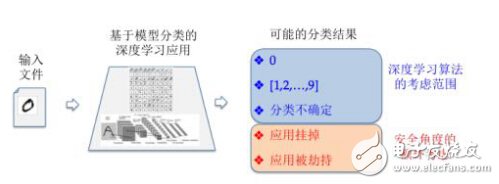

Take handwritten digit recognition, one of the most typical applications in artificial intelligence, as an example. Recognizing handwritten digits from the MNIST data set is a classic deep learning task. At the algorithm level, the discussion of classification results only concerns how closely an input matches a given category and with what confidence. It does not consider that an input may cause the program to crash or even be hijacked by an attacker. These ignored outcomes reflect the gap between the algorithm and its implementation, which is the so-called artificial intelligence security blind spot.

Figure 2: Different learning scenarios for deep learning algorithms and security considerations

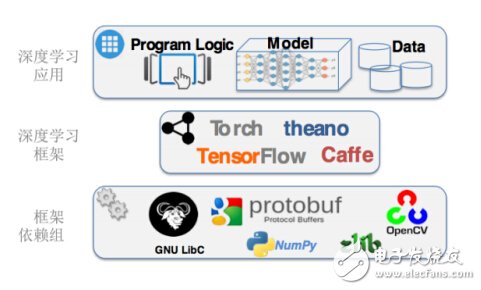
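The gap between algorithm and implementation in the handwritten digit example can be sketched as follows. This is an illustrative loader, not code from the white paper or from any framework: the function name `parse_idx_images` and the `MAX_IMAGES` cap are assumptions. It reads image data in the IDX layout used by the MNIST files (a 16-byte big-endian header holding the magic number 2051, the image count, rows, and columns, followed by one unsigned byte per pixel) and refuses any input whose header does not match the data that actually follows.

```python
import struct

IDX_IMAGE_MAGIC = 2051   # magic number of MNIST IDX image files
MAX_IMAGES = 1_000_000   # hypothetical sanity cap, chosen for illustration

def parse_idx_images(data, expected_rows=28, expected_cols=28):
    """Return a list of raw image byte strings, rejecting malformed input."""
    if len(data) < 16:
        raise ValueError("truncated header")
    magic, count, rows, cols = struct.unpack(">IIII", data[:16])
    if magic != IDX_IMAGE_MAGIC:
        raise ValueError("unexpected magic number")
    if (rows, cols) != (expected_rows, expected_cols):
        raise ValueError("unexpected image dimensions")
    if count > MAX_IMAGES:
        raise ValueError("implausible image count")
    # Never trust the header alone: compare it with the bytes actually present.
    image_size = rows * cols
    if len(data) != 16 + count * image_size:
        raise ValueError("header does not match payload length")
    return [data[16 + i * image_size: 16 + (i + 1) * image_size]
            for i in range(count)]

# A well-formed buffer with two blank 28x28 images.
good = struct.pack(">IIII", IDX_IMAGE_MAGIC, 2, 28, 28) + bytes(2 * 28 * 28)
print(len(parse_idx_images(good)))

# A header that claims 1000 images but carries only one is rejected.
bad = struct.pack(">IIII", IDX_IMAGE_MAGIC, 1000, 28, 28) + bytes(28 * 28)
try:
    parse_idx_images(bad)
except ValueError as err:
    print("rejected:", err)
```

A classifier evaluated only on accuracy never exercises the second case. An implementation that trusts the header and allocates or indexes accordingly is where crashes and memory errors tend to arise.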

At present, deep learning software is mostly built on top of deep learning frameworks. Application developers can build their own neural network models directly on a framework and train them using the interfaces the framework provides. However, while these frameworks simplify the design and development of deep learning applications, they also hide risks because of the complexity of the underlying system.

An awkward fact is that if a deep learning framework or any component it depends on has a vulnerability, every application built on that framework is threatened as well. In addition, modules often come from different developers, who may understand the interfaces between modules differently. When such an inconsistency leads to a security issue, module developers may even argue that the fault lies in how other modules call their code rather than in their own module.

Figure 3: Deep learning framework and framework component dependencies

To assess the security risks of deep learning software, 360's Team Seri0us discovered dozens of software flaws in deep learning frameworks and the libraries they depend on within one month. The flaws cover almost all common vulnerability types, such as memory access violations, null pointer dereferences, integer overflows, and divide-by-zero exceptions. Once exploited by attackers, these vulnerabilities could expose deep learning applications to denial of service, control-flow hijacking, classification evasion, and potential data pollution attacks.
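As an illustration of one vulnerability class named above, the sketch below mimics how a buffer-size calculation in 32-bit signed arithmetic can wrap around when image dimensions come from untrusted input. It does not reproduce any specific flaw found by the team. The function names are hypothetical, and because Python integers do not overflow, the wraparound is simulated explicitly.

```python
INT32_MAX = 2**31 - 1

def buffer_size_unchecked(width, height, channels):
    """Simulate a size computed in 32-bit signed arithmetic, which wraps silently."""
    total = width * height * channels            # exact in Python
    return (total + 2**31) % 2**32 - 2**31       # wrap into the signed 32-bit range

def buffer_size_checked(width, height, channels, limit=INT32_MAX):
    """Validate the dimensions before trusting the computed size."""
    for name, value in (("width", width), ("height", height), ("channels", channels)):
        if value <= 0:
            raise ValueError(f"{name} must be positive")
    total = width * height * channels
    if total > limit:
        raise ValueError("image too large for a 32-bit size")
    return total

# Dimensions an attacker might place in a crafted image header.
w, h, c = 65536, 65536, 1
print(buffer_size_unchecked(w, h, c) == w * h * c)  # False: the 32-bit value wrapped

try:
    buffer_size_checked(w, h, c)
except ValueError as err:
    print("rejected:", err)
```

In native code, a wrapped size like this typically leads to an undersized allocation followed by out-of-bounds writes, which is how an integer overflow can escalate into a memory access violation.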

Therefore, while advancing the application of artificial intelligence, we must focus on resolving these hidden dangers so that the technology better serves people. As the largest Internet security company in China, 360 will dedicate itself to helping the industry and manufacturers solve AI security problems and to raising the security level of the entire artificial intelligence industry.
